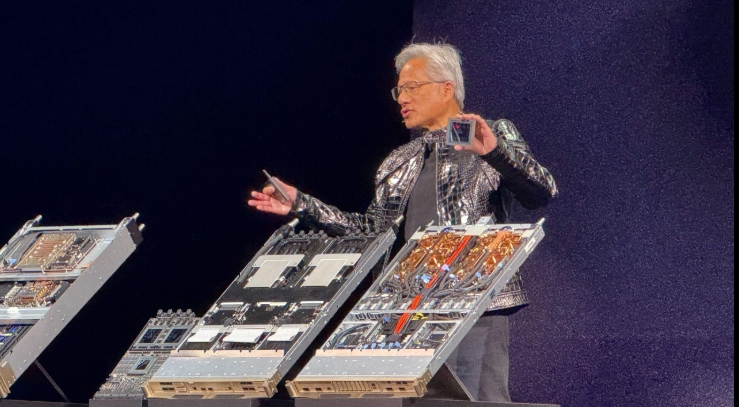

At CES 2026 in Las Vegas, Nvidia CEO Jensen Huang took the stage to kick off the year with major announcements centered on the next generation of AI hardware and a bold vision for “physical AI”—AI that doesn’t just think, but interacts with and understands the real world.

The Vera Rubin Platform: Nvidia’s Next AI Supercomputer

The star of the show was the Vera Rubin platform (often referred to as the Rubin platform), named after the pioneering astronomer Vera Rubin. This is Nvidia’s successor to the blockbuster Blackwell architecture and marks a new era with extreme co-design across six specialized chips:

- Vera CPU

- Rubin GPU

- NVLink 6 Switch

- ConnectX-9 SuperNIC

- BlueField-4 DPU

- Spectrum-6 Ethernet Switch

Huang announced that the Vera Rubin platform is already in full production, ahead of some expectations, with volume ramp-up expected in the second half of 2026. Key highlights:

- Up to 10x reduction in inference token costs compared to Blackwell.

- A 4x reduction in the number of GPUs needed to train mixture-of-experts (MoE) models, also compared with Blackwell.

- Massive scalability: Systems like the NVL72 rack can deliver enormous performance boosts for AI training and inference.
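To make the headline ratios concrete, here is a minimal back-of-the-envelope sketch in Python. The baseline cost per million tokens and the baseline cluster size are hypothetical placeholders chosen for illustration, not Nvidia figures. The "up to 10x" claim is treated as a best case.

```python
# Illustrative arithmetic only: the baseline figures below are hypothetical,
# not Nvidia pricing or real cluster sizes.

def scaled_cost(baseline_cost: float, reduction_factor: float) -> float:
    """Cost after an N-fold reduction (10x means one tenth of the baseline)."""
    return baseline_cost / reduction_factor


def gpus_needed(baseline_gpus: int, reduction_factor: int) -> int:
    """GPU count after an N-fold reduction, rounded up to a whole GPU."""
    return -(-baseline_gpus // reduction_factor)  # ceiling division


blackwell_cost_per_m_tokens = 2.00  # hypothetical USD per million tokens
blackwell_training_gpus = 1024      # hypothetical MoE training cluster size

# Apply the claimed ratios: up to 10x on token cost, 4x on training GPUs.
rubin_cost = scaled_cost(blackwell_cost_per_m_tokens, 10)
rubin_gpus = gpus_needed(blackwell_training_gpus, 4)

print(f"Best-case cost per million tokens: ${rubin_cost:.2f}")
print(f"Training GPUs at the claimed ratio: {rubin_gpus}")
```

Swapping in a real workload's baseline numbers shows what the claimed ratios would mean in practice. Actual savings depend on the model, the serving setup, and how close a deployment gets to the "up to" figure.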

Major cloud providers including AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are lined up to deploy Vera Rubin-based instances later this year.

Physical AI: Bringing Intelligence to the Real World

Huang emphasized “physical AI” as the next frontier: AI systems that perceive, reason, and act in physical environments, from robots to autonomous vehicles. Central to this is the Nvidia Cosmos platform, a suite of open world foundation models designed for simulating real-world physics and generating synthetic data:

- Cosmos Reason 2: A top-performing reasoning vision-language model (VLM) for better physical understanding.

- Cosmos Transfer 2.5 and Predict 2.5: Models for large-scale synthetic video generation and robot policy evaluation.

These models enable training in virtual worlds before deploying to real hardware, accelerating development for robotics and autonomy.

Mercedes-Benz Partnership: AI-Driven Cars Hit the Road This Year

One of the most tangible demos was Nvidia’s deepened collaboration with Mercedes-Benz. The all-new Mercedes-Benz CLA will be the first production vehicle to feature Nvidia’s full DRIVE AV software stack, powered by advanced reasoning models like Alpamayo.

- Launching with enhanced Level 2+ point-to-point driver assistance.

- Capable of navigating complex urban environments (e.g., San Francisco streets) with features like unprotected turns and proactive avoidance.

- Expected on U.S. roads by the end of 2026, with expansions to Europe and Asia following.

Huang showcased videos of the CLA driving autonomously, highlighting how it “learns directly from human demonstrators” for natural, safe behavior.

Why This Matters

Nvidia is positioning itself not just as a chip maker but as the foundation for the entire physical AI ecosystem, from data centers to edge devices, robots, and cars. With open models on Hugging Face and partnerships across industries, the company is accelerating a shift where AI moves from chatbots to agents that interact with the physical world.

As demand for AI compute explodes, the Vera Rubin platform’s efficiency gains could help address energy concerns while keeping Nvidia at the forefront of the AI race.

CES 2026 is just getting started. Stay tuned for more breakthroughs in AI and robotics!